The Apprenticeship Paradox

Need experience to supervise AI. But AI eliminates the roles where experience is gained.

The Junior Doctor's Dilemma

In 2024, a medical school dean shared what she called "the most troubling trend in modern healthcare":

"Our residents are graduating with better test scores than ever. Their AI-assisted diagnoses are more accurate. But when the system goes down, they freeze. They've never reasoned through a case from first principles."

The problem wasn't the technology. It was the training loop.

For a century, junior doctors learned by doing. They examined patients. Made preliminary diagnoses. Got corrected by senior physicians. Made the same mistakes less often. Developed pattern recognition the hard way.

Now AI handles the pattern recognition. Junior doctors validate outputs. They're more accurate because they're not doing the reasoning. They're approving the reasoning.

But you can't supervise what you never learned to do.

That's the Apprenticeship Paradox.

The Expertise Pipeline

Expertise doesn't appear spontaneously. It develops through a pipeline:

Novice → Does basic work → Learns from errors → Intermediate

→ Handles complexity → Develops judgment → Expert

→ Supervises juniors → Transfers knowledge → [Next generation]

Every stage requires the previous one. You can't skip steps. You can't shortcut experience with lectures. The pipeline is sequential.

AI breaks the pipeline at the first stage: the basic work that novices learn from.

If novices don't do basic work (because AI does it), they never learn from errors. If they never learn from errors, they never develop judgment. If they never develop judgment, they can't become experts. If they can't become experts, there's no one to supervise the next generation.

The pipeline collapses, but slowly. The experts are still here—for now. The crisis is invisible until the last experts retire and no one's left who learned the old way.
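A rough way to see why the collapse is slow is to treat the expert pool as a stock with an outflow (retirement) and an inflow (people finishing the pipeline). The sketch below is a toy model, not data: the function name, the 100-expert starting pool, the 5% retirement rate, and the inflow of 5 new experts a year are all assumptions chosen only to make the shape visible.

```python
def simulate_expert_pool(years, experts, retire_rate, new_experts_per_year):
    """Return the expert headcount at the end of each year.

    Each year a fixed fraction of experts retires, and a fixed number of
    people who completed the novice-to-expert pipeline join the pool.
    All inputs are hypothetical; this is an illustration, not a forecast.
    """
    history = []
    for _ in range(years):
        experts = experts * (1 - retire_rate) + new_experts_per_year
        history.append(experts)
    return history


# Assumed numbers: 100 experts today, 5% retire each year.
# "healthy" replaces retirees; "broken" has no pipeline inflow at all.
healthy = simulate_expert_pool(30, 100.0, 0.05, new_experts_per_year=5.0)
broken = simulate_expert_pool(30, 100.0, 0.05, new_experts_per_year=0.0)

for year in (1, 5, 10, 20, 30):
    print(f"year {year:2d}: healthy={healthy[year - 1]:6.1f}  "
          f"broken={broken[year - 1]:6.1f}")
```

Under these assumptions the two scenarios differ by only five experts after the first year, which is why nobody notices. The gap compounds quietly from there.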

Where This Is Already Happening

Law

Entry-level work (document review, due diligence, initial research) is increasingly automated. New associates spend more time managing AI outputs than learning the craft.

One partner told me, "Ten years ago, by year three, associates understood contracts in their bones. Now they understand how to prompt. That's not the same thing."

Finance

Junior analysts used to build models from scratch. Learn why each assumption mattered. See what happened when they got it wrong.

Now they validate AI-generated models. The work is faster and more accurate, but nobody understands the models deeply enough to spot when the AI's assumptions are wrong.

Software

Copilot and its successors write more code than junior developers. Productivity is up. But engineers who learned with AI struggle when they hit novel problems the AI hasn't seen.

One tech lead: "They're great at prompting. They can't debug. Debugging requires understanding what went wrong. They never wrote the code that broke."

Why This Is Unsolvable Individually

Here's the trap: the Apprenticeship Paradox is a collective action problem.

Every organization faces pressure to automate entry-level work. The gains are immediate: lower costs, faster output, fewer errors. The losses are delayed: expertise erosion takes years to become visible.

If Organization A maintains apprenticeship roles while Organization B automates, B wins in the short term. A looks inefficient. A faces pressure to follow.

Rational individual choices lead to collective catastrophe.

The industry automates. The pipeline breaks everywhere. And when the current experts leave, everyone discovers simultaneously that no one knows how to do the work.
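The trap has the shape of a classic two-player dilemma, and a few lines of code make that concrete. The payoff numbers below are invented for illustration, and they fold short-term and long-term results into a single score, which is a big simplification. `PAYOFFS` and `best_response` are hypothetical names.

```python
# Hypothetical payoffs as (Organization A, Organization B); higher is better.
# The numbers are assumptions chosen to mirror the argument, not measurements.
PAYOFFS = {
    ("train", "train"): (3, 3),        # both keep apprenticeship roles
    ("train", "automate"): (1, 4),     # A looks inefficient next to B
    ("automate", "train"): (4, 1),     # A wins in the short term
    ("automate", "automate"): (2, 2),  # pipeline breaks for everyone
}


def best_response(other_choice):
    """Return the choice that maximizes A's payoff given B's choice."""
    return max(("train", "automate"),
               key=lambda mine: PAYOFFS[(mine, other_choice)][0])


for b_choice in ("train", "automate"):
    print(f"If B chooses {b_choice}, A's best response is "
          f"{best_response(b_choice)}")
```

With these assumed payoffs, automating is A's best response whatever B does, and the same holds for B by symmetry. Both end up at (2, 2), even though both would have been better off at (3, 3).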

The Supervision Bottleneck

There's a balancing force: the Supervision Bottleneck.

AI makes errors. Some errors are subtle. Catching them requires expertise.

The more work AI does, the more supervision it needs. But supervision requires experienced humans. And if the pipeline is broken, there are fewer experienced humans to supervise.

The math doesn't work. Either you invest in building expertise (which requires slowing down the automation), or you accept undetected errors at scale.

Right now, organizations are choosing the errors. They just don't know it yet because errors accumulate invisibly until they cascade.
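The arithmetic behind the bottleneck can be sketched in a few lines. This is a back-of-the-envelope model under loud assumptions: every reviewed output has its errors caught, every unreviewed output keeps them, and the volumes, error rate, and review capacity are made-up numbers. `unreviewed_errors` is a hypothetical helper.

```python
def unreviewed_errors(ai_outputs, error_rate, experts, reviews_per_expert):
    """Estimate AI errors that no expert ever looks at in one period.

    Assumes (for illustration only) that expert review catches every
    error in the outputs it covers and that uncovered outputs ship as-is.
    """
    review_capacity = experts * reviews_per_expert
    unreviewed = max(0, ai_outputs - review_capacity)
    return unreviewed * error_rate


# Assumed scenario: 2% of AI outputs contain an error, and each expert
# can review 100 outputs per period.
scenarios = [
    ("today", 10_000, 100),                    # capacity matches volume
    ("fewer experts", 10_000, 60),             # pipeline has thinned out
    ("fewer experts, more AI", 20_000, 60),    # automation keeps growing
]
for label, outputs, experts in scenarios:
    missed = unreviewed_errors(outputs, 0.02, experts, 100)
    print(f"{label}: about {missed:.0f} errors never reviewed")
```

In this toy scenario nothing slips through while 100 experts cover 10,000 outputs. Drop to 60 experts and roughly 80 errors go unreviewed per period. Double the AI's output as well and the figure is roughly 280. The gap widens from both sides at once.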

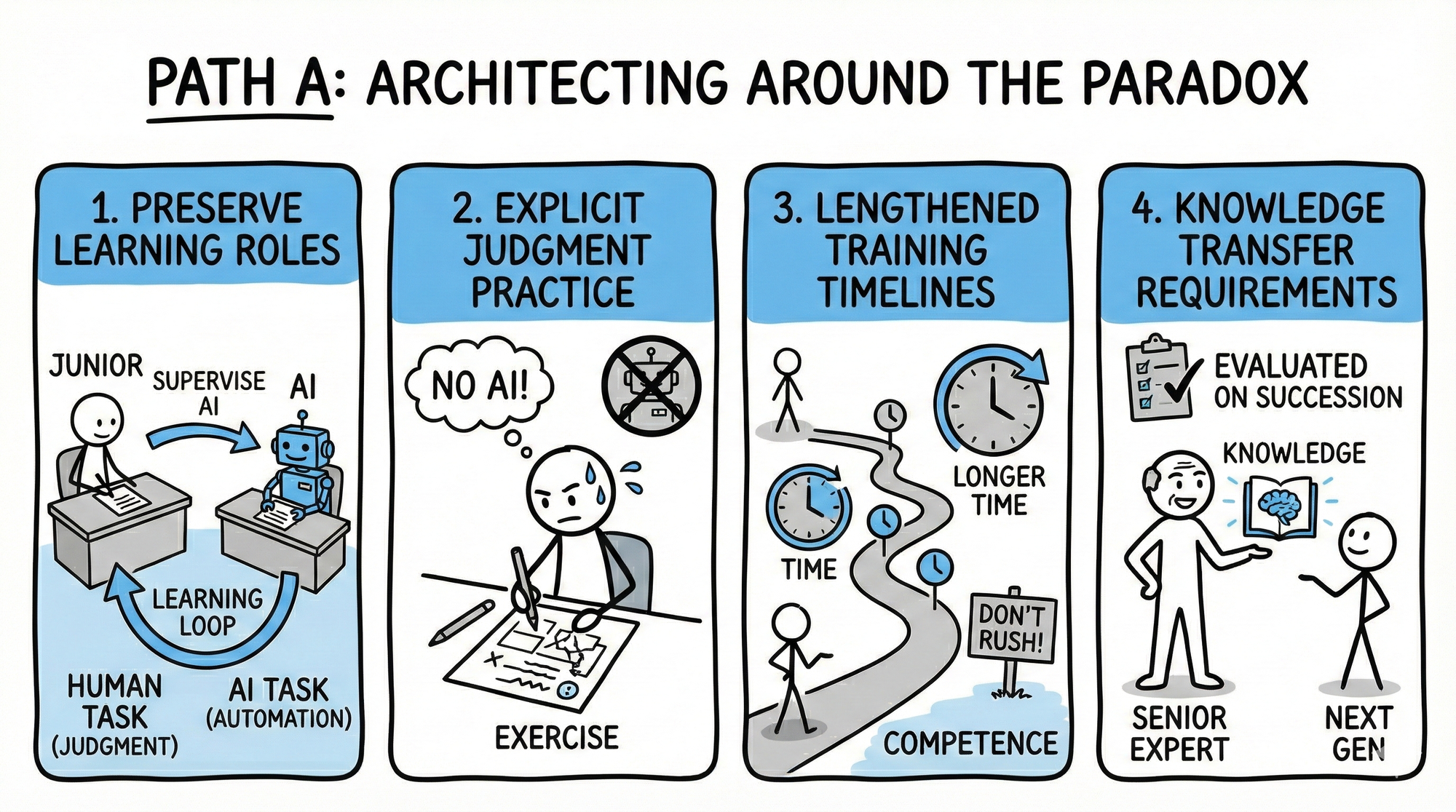

What Path A Organizations Do

Some organizations see this coming. Call them Path A: instead of deferring the consequences, they're architecting around the paradox.

Preserved learning roles. Entry-level positions are redesigned, not eliminated. The work changes, but the learning loop stays. Junior people don't do what AI does. They do what AI can't, and they're trained to supervise what AI does.

Explicit judgment practice. Regular exercises where AI is unavailable. Force people to reason without the crutch. Make the atrophy visible so it can be corrected.

Lengthened training timelines. Accept that competence takes longer when the learning opportunities are scarcer. Don't rush people into "supervising" roles they're not ready for.

Knowledge transfer requirements. Senior experts don't just do their own work. They're evaluated on how well they've prepared the next generation. Succession isn't optional.

The Uncomfortable Truth

This paradox may not be solvable without intervention.

If market forces push everyone toward Path B (automate entry-level work, defer the consequences), an industry-wide expertise collapse is coming. Smart organizations will build moats by being exceptions, but they can't fix the industry on their own.

Policy may be required. Licensing requirements that mandate human training hours. Liability rules that make expertise erosion costly for the organizations that allow it. Professional standards that protect the pipeline.

This isn't a technology problem. It's a coordination problem. And coordination problems don't solve themselves.

The Standard

The Apprenticeship Paradox is a test of whether we're serious about the future.

Automating entry-level work feels like progress. It's faster. It's cheaper. It's more accurate right now.

But expertise is a non-renewable resource if the pipeline breaks. Once the people who learned the old way are gone, you can't get them back.

The question isn't whether AI can do entry-level work. It can.

The question is: where are the next experts coming from?

If you don't have an answer, you're on Path B. And the collapse is already in progress—you just can't see it yet.

"Need experience to supervise AI. But AI eliminates the roles where experience is gained. That's not a paradox you solve with technology. That's a paradox you solve with intention."

This post explores the Entry-Level Paradox, a Path B consequence from The Context Flow. The pipeline breaks before anyone notices.