The Context Flow

Whether AI makes you better, makes you worse, or makes you capable of the impossible comes down to a single variable.

Three Thousand Dots

Each one pressed by hand into a clay mold. One at a time.

Nobuho Miya is a third-generation kettle maker in Iwate Prefecture, Japan. His family's Kamasada workshop has operated since the Meiji era. The ironware he produces, Nanbu Tekki, was the first craft that Japan's government designated a national traditional craft.

They sell for $500 to $3,000.

A factory version costs $80.

Both boil water.

The difference isn't function. Miya's kettle is fired over charcoal in a process called kanakedome, an 800-degree-Celsius transformation that no enamel coating replicates.

The arare pattern on the surface isn't decoration. It's roughly 3,000 individual decisions, each pressed into wet clay before the iron ever flows. Seventy percent of production time goes into building the mold. A form he'll use once, maybe twice, before it's destroyed.

You can't automate those decisions. Not because the technology doesn't exist. Because the decisions require a reservoir of judgment that only accumulates through decades of pressing dots into clay.

You already sense that something like this is being lost. Not the kettles. Something beneath the kettles.

You're right.

The Variable Nobody Measures

The mechanism beneath the kettles has a name. It operates everywhere humans work alongside powerful tools. And it operated long before artificial intelligence existed.

Who provides the framing?

That single question predicts the outcome more reliably than the quality of the tool, the skill of the operator, or the size of the investment. Ask it of any task, any team, any industry. The pattern is the same.

You chose a direction this morning. When you opened your laptop and started your first task, you either framed the problem and reached for AI, or you reached for AI and evaluated what came back.

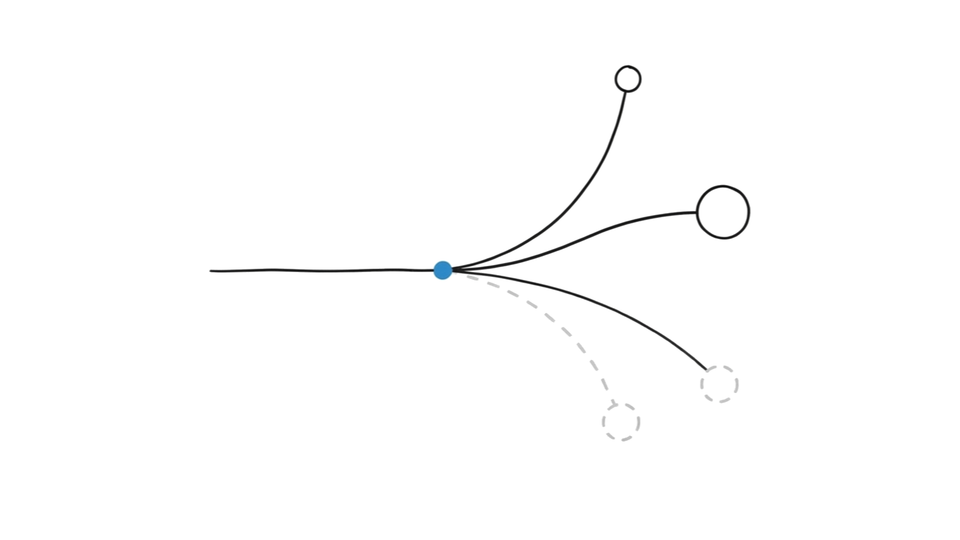

One direction builds something. The other erodes it. A third, available only after the first is mastered, creates what neither human nor machine could produce alone.

Three paths. One variable. Everything downstream follows.

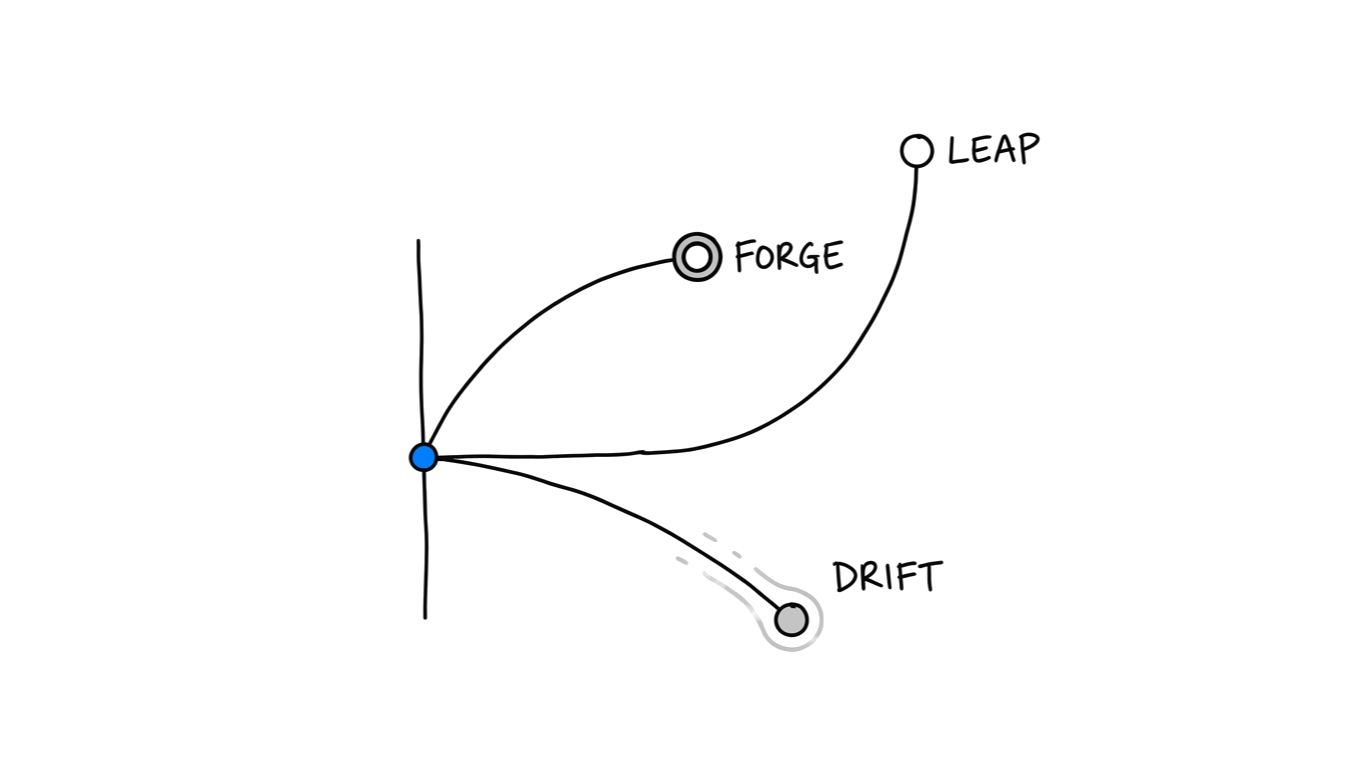

The Forge

In Maikel Kuijpers' research, something unexpected emerged.

The Leiden University archaeologist had spent years studying Bronze Age metalworking. But when he visited contemporary artisan workshops for his documentary The Future is Handmade, the observation that stopped him wasn't ancient.

"When people work with their hands, quality can't be rushed. Nor can it be faked."

He described an atmosphere of order that arose from a shared sense of hierarchy among the artisans. Not imposed hierarchy. Visible hierarchy.

Masters didn't need to say they were the masters. It was obvious in the work.

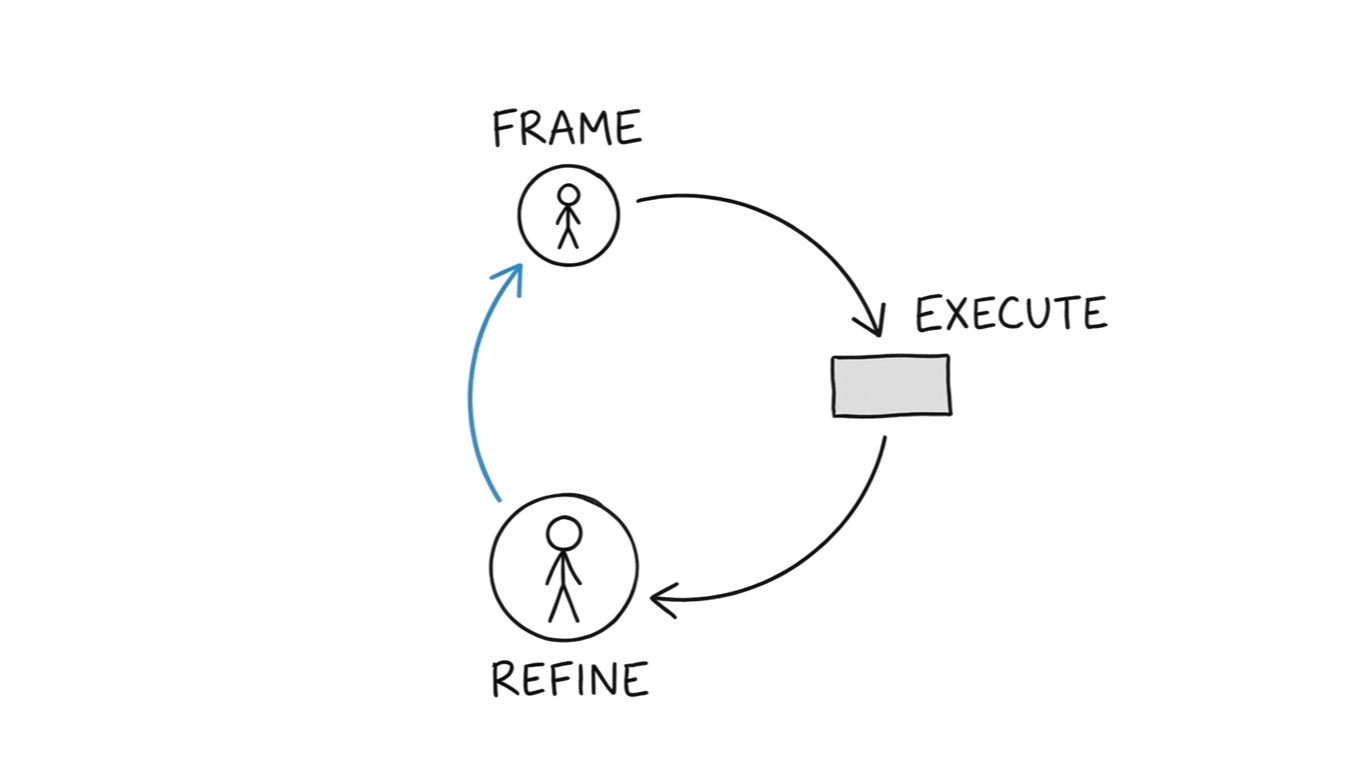

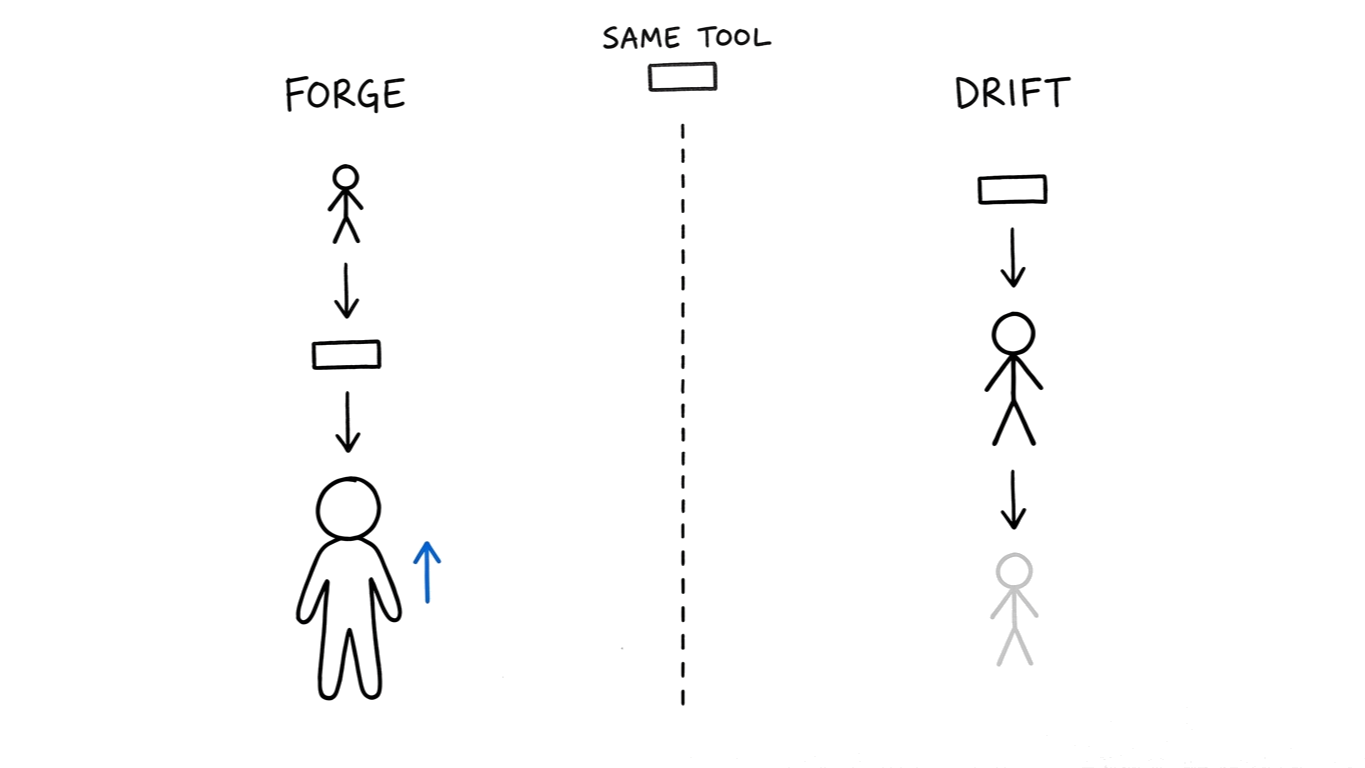

This is The Forge. Human frames first. Tool executes second. Human refines third.

In 2016, Geoffrey Hinton made a prediction at a machine learning conference in Toronto that sent shockwaves through medical education. The godfather of deep learning told the room: "People should stop training radiologists now. It's just completely obvious that within five years, deep learning is going to do better than radiologists."

A decade later, we have more radiologists than ever.

Not because the AI failed. It got spectacularly good.

But the practices where doctors formed hypotheses first and then used AI to test them didn't just survive. They got sharper. The AI didn't replace their judgment. It gave their judgment more surface area.

The same pattern appeared in a chess tournament in June 2005.

The PAL/CSS Freestyle event on Playchess.com let anyone compete: grandmasters, supercomputers, or human-AI teams using any combination of tools.

The winners were Steven Cramton and Zackary Stephen. USCF ratings: 1685 and 1398. Twentysomething amateurs from New Hampshire running three consumer-grade PCs with off-the-shelf chess engines.

They beat a Russian grandmaster in the final. They outlasted the Hydra supercomputer.

Garry Kasparov, writing in The New York Review of Books, identified what happened: "Weak human + machine + better process was superior to a strong computer alone and, more remarkably, superior to a strong human + machine + inferior process."

The amateurs won because they knew which engine evaluated which type of position best. They spotted when the software fell into the horizon effect, the blind spot where an algorithm can't see consequences past its search depth. They split analysis across engines and compared findings.
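The horizon effect is easier to see than to describe. Here is a minimal sketch in Python, assuming a toy game tree built by hand. The names `Node`, `minimax`, and `best_move`, the move labels, and every score are hypothetical illustrations, not the engines Cramton and Stephen ran. A search one move deep grabs a pawn. A search two moves deep sees the trap behind it.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A position in a toy game tree (hypothetical, for illustration only)."""
    static_eval: float  # heuristic score from the maximizer's point of view
    children: dict = field(default_factory=dict)  # move name -> resulting Node


def minimax(node, depth, maximizing):
    """Depth-limited minimax: at the horizon it trusts the static evaluation."""
    if depth == 0 or not node.children:
        return node.static_eval
    scores = [
        minimax(child, depth - 1, not maximizing)
        for child in node.children.values()
    ]
    return max(scores) if maximizing else min(scores)


def best_move(root, depth):
    """Pick the maximizer's move at the root using a search of the given depth."""
    return max(
        root.children,
        key=lambda move: minimax(root.children[move], depth - 1, False),
    )


# Assumed scores: grabbing the pawn looks good (+1) until the reply that
# traps the queen (-9) comes into view one move later.
root = Node(0, {
    "grab_pawn": Node(1, {"trap_queen": Node(-9), "other_reply": Node(1)}),
    "quiet_move": Node(0, {"reply": Node(0)}),
})

shallow_choice = best_move(root, depth=1)  # cannot see the opponent's reply
deeper_choice = best_move(root, depth=2)   # sees the reply and avoids the trap
print(shallow_choice, deeper_choice)
```

The shallow search is not wrong about what it can see. It is blind to what sits one move past its depth, which is the gap a human who knows the tool can learn to watch for.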

Their advantage wasn't chess skill. It was meta-cognition about their tools.

The Forge is where expertise compounds. Not despite the tool. Through the tool.

The Drift

Destin Sandlin, an engineer who runs the YouTube channel SmarterEveryDay, conducted an experiment with his welder friends. They mounted gears on a bicycle's head tube so that turning the handlebars left steered the wheel right.

Nobody could ride it. Not one person who tried. Even knowing exactly what the modification did.

Sandlin practiced for eight months. Less than five minutes a day.

One morning, it clicked. Not gradually. Binary. One day he couldn't ride the backward bicycle, and the next day he could.

Then he tried to ride a normal bicycle.

He couldn't.

For twenty minutes in Amsterdam, the man who'd ridden bikes his entire life couldn't steer one. His neural pathways had rewired. Then the old skill came back. But the fact that it could vanish at all reveals something about expertise that most organizations ignore.

Skills you don't exercise don't just sit there waiting. They degrade.

Now picture two doctors. Both use the same AI diagnostic tool. One uses it to surface possibilities she hadn't considered, then applies clinical judgment. The other treats it as the answer and confirms.

Same tool. Same hospital.

After a year, the first doctor catches more edge cases, sees more patterns, makes sharper calls. The second has become a rubber stamp. She couldn't say why she would ever disagree with the machine. Because she never practices disagreeing.

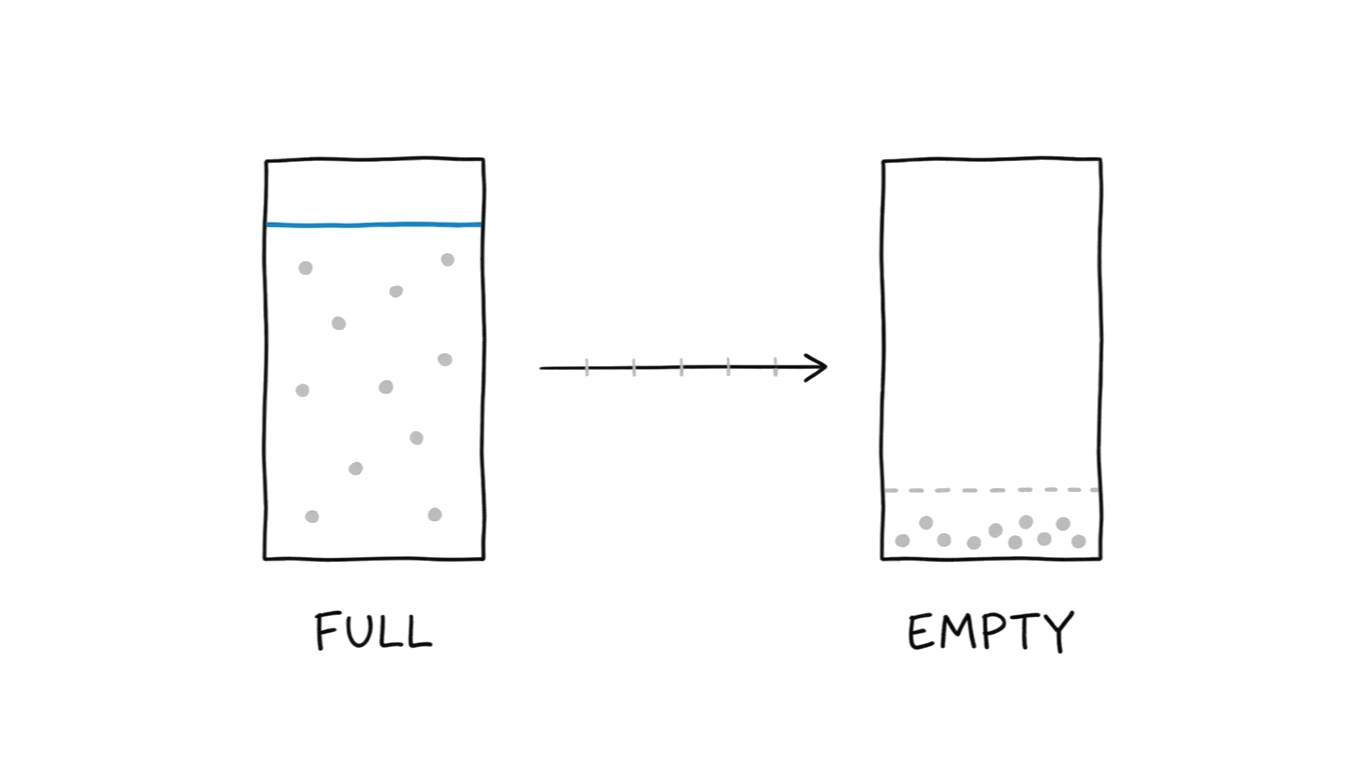

This is The Drift. AI generates, human approves, expertise atrophies.

The metrics look fine. Productivity is up. Quarterly reviews glow.

But each month, the reservoir drops. Each month, the people who could catch the errors lose the ability to catch them.

The frontier stays jagged. AI still fails unpredictably. The humans who could see the jagged edges are going blind.

By the time someone notices, the people who could fix it are gone.

Sandlin's six-year-old son learned the backward bicycle in two weeks. Children rewire faster than adults. Organizations that have drifted for years will find that rewiring takes longer than the drift did.

Who Goes First

The Forge and The Drift use the same technology.

The difference is one design choice: who goes first.

That's the entire theory. Not which AI model you buy. Not how much you invest in training. Not whether your industry is "ready."

One variable: the direction in which meaning flows at the moment of engagement. It determines whether expertise grows, erodes, or expands into territory that didn't exist before.

The interface between human and AI is a fork, not a spectrum. A small change at that junction cascades into completely different trajectories.

Who frames. Who evaluates. Who learns.

Cramton and Stephen used the same chess engines available to every other team. The radiologists used the same AI. Miya uses the same iron.

This isn't about becoming a Luddite. Every story in this piece involves people using powerful tools.

The kettle maker uses fire. The chess amateurs used software. The radiologists used neural networks. All of them went first.

The question was never whether to use the tool.

The question was whether the human provided the framing first.

The Leap

Once The Forge is stable, a third path opens.

In November 2020, DeepMind's AlphaFold solved protein structure prediction at the CASP14 competition. A problem that had consumed entire PhD careers. The system predicted in minutes shapes that had taken researchers years to determine.

AlphaFold didn't replace biochemists. It gave them reach.

Scientists who understood which proteins mattered and why could now ask questions that were physically impossible to answer before. They provided the meaning and the context. The AI supplied capability at a scale no human could match. The human supplied judgment at a depth no AI could reach.

This is The Leap. Work that couldn't exist without both. Not faster versions of old work. New work.

The missing middle between what humans do and what AI does. The territory where combination creates something neither produces alone.

But The Leap requires The Forge as foundation. The moment human framing disappears, The Leap collapses into The Drift. The machine generates more, but nothing means anything.

This is why the direction matters more than the destination.

The Fair Objection

There's a legitimate counter-argument.

In domains where output is objectively verifiable, the direction of flow matters less.

Code that compiles. Math that checks. Experiments that replicate.

The output is the quality signal. You don't need human framing to know that 2 + 2 = 4.

This is the calculator objection, and it has real force. Programming, data analysis, formal logic: these domains have built-in verification that reduces the need for human judgment as gatekeeper.

The theory holds most strongly where output quality is subjective, where expertise takes years to develop, and where the frontier is widest: medicine, law, strategy, creative direction, consulting, leadership. Domains where the question "is this good?" requires judgment that only comes from doing the work.

In 2023, lawyers Peter LoDuca and Steven Schwartz submitted a brief to federal court citing multiple cases that didn't exist. ChatGPT had generated them. The lawyers were sanctioned $5,000 under Rule 11.

Not because the AI failed. Hallucination is a known property of large language models. Because the lawyers had never built the judgment to evaluate whether the citations were real.

They were on The Drift. And the frontier caught them.

The Silent Carrier

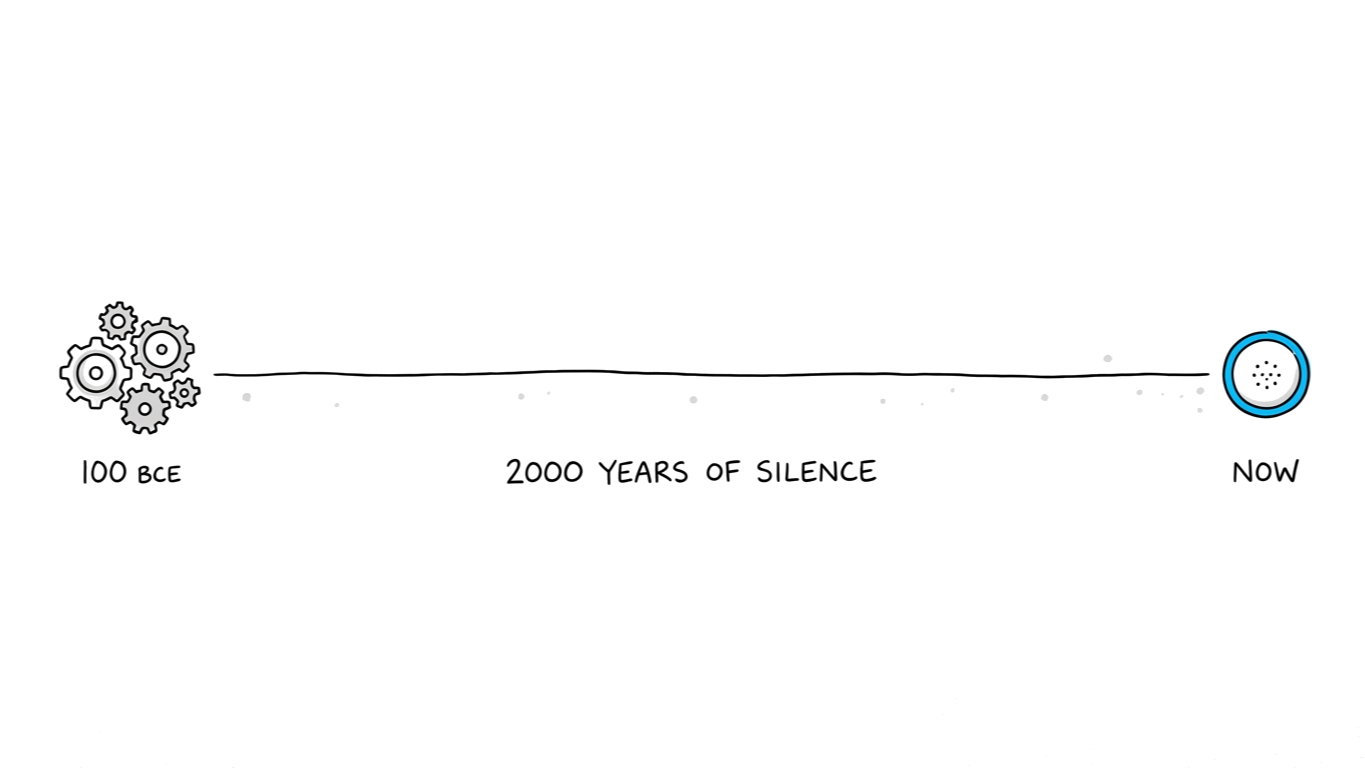

In the waters off Antikythera, a Greek island, sponge divers found a shipwreck in 1900. The salvage that followed recovered fragments of a mechanism built around 100 BCE. More than thirty interlocking bronze gears. It could predict eclipses and track calendar cycles. Nothing of comparable complexity would appear again for over a thousand years.

The knowledge was in the mechanism. But when the people who understood it were gone, the knowledge went silent. It waited two millennia for someone skilled enough to read it again.

Chris Budiselic, a mechanical engineer in Australia, has spent years reconstructing the Antikythera mechanism on his YouTube channel Clickspring. Period-correct tools. Period-correct materials. In the process, he discovered a 354-day lunar calendar ring that scholars had previously dismissed as a mistake.

His finding was published as a peer-reviewed paper.

He could read the mechanism because he'd done the work with his hands. The knowledge was always there. It needed a human reservoir deep enough to receive it.

Nobuho Miya presses dots into clay because his grandfather pressed dots into clay. The knowledge lives in the pressing. Not in any manual. Not in any specification.

In the 3,000 individual decisions that make each kettle unrepeatable.

AI is the most powerful tool humans have ever built.

The Forge compounds its power. The Drift lets it hollow you out. The Leap takes you somewhere neither of you could go alone.

The only question is who goes first.

Explore Further

The engine has nine moving parts. Master them in sequence:

Cramton and Stephen didn't win with better software. They won because they understood what each engine couldn't see. The Jagged Frontier shows why AI's failure boundaries are permanently unpredictable. And permanently require human expertise to navigate.

The two doctors used the same tool. One got sharper. One went blind. Use It or Lose It traces the neuroscience of expertise atrophy. And why AI makes the decay invisible.

The lawyers who cited fake cases never built the judgment to catch the error. The Apprenticeship Paradox explains why eliminating junior roles destroys senior expertise on a timeline nobody is tracking.

The factory kettle costs $80. The handmade one costs $3,000. Both boil water. The Button Problem asks the question markets haven't answered yet: when effort disappears from the output, what signals value?
